Enjoying the newsletter? Gimme $2 for sea-monkeys.

This week’s question comes to us from Dana Chisnell:

How do I get through a day without hearing or seeing anything about AI?

Let’s talk about basketball. My very favorite day of the year is the Saturday the NBA Playoffs kick off, which this year fell on April 19th. (Holy shit, the playoffs last forever.) On Playoff Saturday I get to watch four back-to-back-to-back-to-back basketball games starting at 10am. Same thing on Sunday. Then they start scattering them throughout the week. But that first weekend is pure joy and chaos. You’ve got teams pacing themselves because they believe they’re making a deep run, you’ve got teams that know their only chance to make it to the next round is to do very weird things that the other team isn’t expecting, and you’ve got guys on those latter teams playing for new contracts so they’re doing even weirder things to get noticed. All of this makes for very entertaining basketball. Which is not what you asked me about. We’re getting there.

This also means that for the last two months I’ve been watching a lot of TV ads. I am now an expert on three things: online gambling, GLP-1 drugs, and AI.

Here’s the thing about AI ads: they’re amazing. According to some of the ads I’ve seen, AI will help you write a paper, grade your students’ papers, sheetrock your wall, raise your children, send out invoices, design your website, write your résumé, schedule your aunt’s funeral, give you a good recipe for chicken wings, help you put together an outfit that slays, help you build a LEGO, write a sales report, summarize a sales report, tell you what kind of music you like, put together a good dating profile, teach you how to fire a gun, tell you who to vote for, and recite interesting facts about Hitler.

Here’s the thing about actual AI: aside from the Hitler part, it does precious fucking little of that. But it demos really really well. It’s really easy to make a good AI ad. You come up with a thing that a lot of people hate doing, you decide that AI can do that thing, then you shoot the ad that backs it up. You show it 17 times during Game 6 of the Knicks/Pacers series and 8 million people see it. Subtract the people who naturally distrust whatever is being advertised on TV, and even if that’s 50%, you’ve still convinced 4 million people that Google AI can tell you how to repair a giant hole in your living room wall, which it cannot.

When I was a kid I was mesmerized by the comic book ads for Sea-Monkeys. “Enter the wonderful world of amazing live Sea-Monkeys! Own a bowlful of happiness! Instant pets!” This was the headline next to an amazing illustration of a family of Sea-Monkeys posing in front of their Sea-Monkey castle. With their big smiles, three-antennae heads, Sea-Monkey dad’s tail strategically covering his Sea-Monkey dick, and Sea-Monkey mom looking like she could get it, with her little Mary Tyler Moore flip-do. There was another illustration of a human family, straight out of the John Birch Society playbook, overseeing their Sea-Monkeys living in a fishbowl. Reader, I wanted these Sea-Monkeys. They were going to be my new family. So I waited for my Dad to be in a good mood (about to leave for the evening) and I asked him for a dollar. “Only $1.00!” just like the ad told me to say. I filled out the coupon in the ad very very carefully, cut it out very carefully with my mom’s good scissors, put it in an envelope, which I also addressed very carefully, asked my mom for a stamp, and the next day I deposited the envelope in a mailbox on my way to school. Then I waited. And waited. And waited some more.

About 6–8 weeks later I received an envelope back. I ran to my room, where I had a goldfish bowl ready and waiting, opened the envelope, which contained a second smaller envelope, and dumped the contents of that envelope into the fishbowl. I watched as maybe three tiny-as-fuck brine shrimp made their way slowly through the water in the fishbowl, and landed on the bottom with the sound of a deflating dream. From the future, I saw Nelson Muntz point at me and say “ha ha!” And still, I checked that fishbowl on the hour for days. Maybe it just took a little time for my Sea-Monkey family to wake up. It never happened.

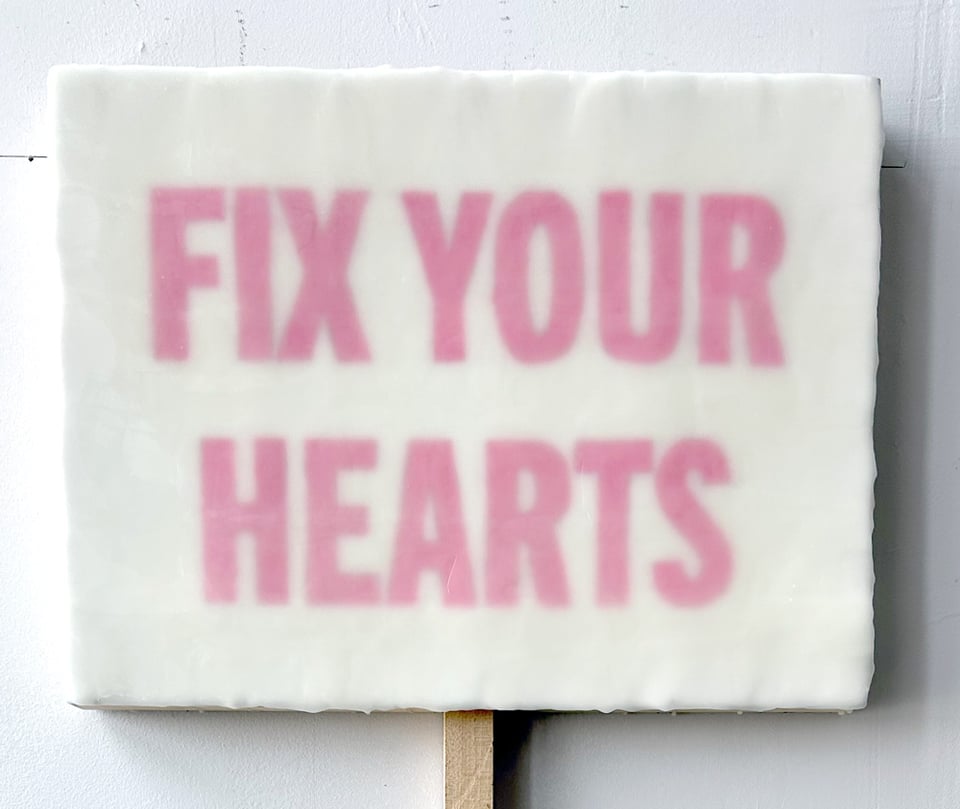

AI is Sea-Monkeys.

The promise is there and it’s exciting. The hope of new friends that will live, laugh, love in a little fishbowl next to my bed. Keeping me company. Laughing at my jokes. Saying things like “We wish you could come down here and play with us in our super cool Sea-Monkey castle.” The reality is three dead brine shrimp at the bottom of a fishbowl that your mother eventually flushes down the toilet after calling you an idiot. At least dead brine shrimp don’t tell you that Hitler had some good ideas, actually.

Can AI be useful in certain circumstances? Sure. So are brine shrimp. (They’ve been to space!) Is it all that? It’s not. AI is a Sea-Monkey ad being peddled as a promise of something that it is not.

Sea-Monkeys were my first experience with hype cycles. In retrospect, $1 was a good price for that lesson.

But again, this was not your question. And you already know that, which is why you’re asking the question. Still, it’s worth exploring why we’re seeing and hearing so much about AI right now. Ironically, this will add one more essay to the pile of essays that you’re wishing you could get away from. But having asked the question, you kind of did that to yourself.

Why are we seeing 17 AI ads during a Knicks/Pacers game? One answer is that the tech companies running the ads can afford it. (Ads run around $300k during the later rounds. That number may be wrong; it came from a Google AI summary.) But that speaks more to the how than the why. The why is simple, though. These companies need a hit, and they need a hit bad. Silicon Valley is in a slump. After striking out with blockchain, crypto, NFTs, web3, the metaverse, stupid shit you wear on your face (or more honestly, don’t wear on your face), and pretending (but not really able to actually fool anyone) that they gave a shit about minorities for a brief moment in 2020, the tech industry was losing the room. Mind you, they were still making money hand over fist, but the bloom was coming off the rose. They were coming off an insane couple of decades of innovating at a furious pace, being seen as gods, and being invited to all the good parties, and they were settling into a mature age of “running things while making incremental improvements, with the occasional breakthrough,” which, frankly, doesn’t make the cocaine flow. At the same time they were being asked hard questions about weird little things like “was your platform instrumental in a genocide in Myanmar?” and “what’s with all the Nazis?” Which, to be fair, are bummer questions. Especially when you’re trying to enjoy cocaine.

So when AI got to the point of almost-kinda-sorta-semi maturity (but not really) they ran with it. Then they took anything else that kinda-sorta felt like AI and tossed that onto the pile as well. Suddenly everything is AI and AI is in everything. Stuff that’s been around forever, like auto-complete and speech-to-text, is now “AI.” It’s not. Suddenly, Google Drive is asking me if I want to let AI write these newsletters. I don’t. Suddenly, Google search—the backbone of the internet—is a piece of shit. (OMG, were those em dashes?! Is this AI? Butterfly meme!) Suddenly, students and professors are arguing about who’s writing and grading papers. Suddenly, we’re firing up Three Mile Island so incels can generate six-fingered girlfriends that don’t give them shit for being useless. Suddenly, designers who were previously tasked with making things “user-centered” (this was never a thing, by the way, but that’s beyond the purview of today’s newsletter) are being tasked with creating good prompts, and then staying up all night manually fixing the slop that was generated while also fearing for their livelihood because… suddenly everyone is unemployed. (Oh, did you think Silicon Valley was trying to artificially extend the bubble for your benefit? My sweet summer child.)

And the Nazi problem was solved by just becoming Nazis themselves. (What if the bug was the feature? S-M-R-T!)

I have yet to answer your question, which is how to avoid all this shit. Well, it’s hard, seeing that I can’t even watch a basketball game without being inundated with it. The honest answer is that you’re not going to be able to avoid it completely. At least for a little while. The enshittification of everything that previously worked just fine is still speedrunning to the lower circles of hell where venture capitalists count their money. I’m currently hanging on to an old laptop, which mostly works just fine, because I know that a new one will be swarming with AI crap. I’m currently hanging on to an old phone for the same reason. Oddly, the shit they thought would re-energize our interest in these things, and take us back to a time when people would line up for the latest model of both, has seemingly had the opposite effect. And the folks getting suckered in because the ads are amazing, and because AI demos very well, will eventually realize that what they were sold and what arrived at the door are two very different things.

But you don’t need to believe me when you can believe Roxane Gay:

> It is a tool designed to render the populace helpless, to make people doubt their innate intelligence, and to foster overreliance on technology.

AI is Sea-Monkeys. AI is hope in exchange for something that’s dead.

Fun fact I just discovered! The company that sells Sea-Monkeys still exists! They just celebrated their 65th anniversary. Good for them. They’ve rebranded as some vague environmental toy you’d find at an aquarium gift shop. But now you get the whole thing at once. No more advertising in comics. No more sending in an envelope with a dollar bill and waiting. Some things never change, though. From their FAQ:

> I HAD A BUNCH OF SEA-MONKEYS BUT THEN THE NUMBER DWINDLED. WHY? Sadly, that’s common in nature. Many babies will hatch knowing that only the strongest will make it to adulthood.

Nature is fucking brutal.

Will AI still be with us in 65 years? Well, as much as I’m confident that anything might still be here in 65 years, sure. The hype cycle will eventually crash, and the parts of AI that actually make people’s lives easier will possibly live on, having safely extracted themselves from the hype cycle. And before you write your “well, actually” response… you can’t get mad at people for conflating all the different types of AI when you purposely threw them all together to build your hype cycle. You did this to yourselves.

I don’t know which parts of AI will survive, but they’re most likely not in generative AI. Turns out people like making things.

Silicon Valley’s era of innovation is over. This is their villain era. The era of the con. Having bled themselves dry of ideas, and of all sense of moral decency, they’re now attempting to bleed us dry of our own humanity. And lest you think I’m just being cynical, my cynicism towards technology comes from a belief in people. I believe that people are capable of good things. I believe people are even capable of great things. I believe that people make great art. I believe that people enjoy making all types of art. I believe that people write amazing things. (People don’t save each other’s love letters because they’re great literature.) I believe that people, at their best, want to communicate, not just with each other in the here and now, but also with those who will hopefully come after us. We want our descendants to know we were here, we want them to know we made things, we want them to know that we talked funny (our descendants will think we talked funny).

I know this because I’ve seen us do this. I’ve seen us examine the past. I’ve seen us look for evidence of our ancestors. (I’ve also seen us hide evidence of our ancestors.) I’ve seen us gather in museums to see the art our ancestors made. I’ve seen us gather in movie houses to see the movies our ancestors made. Every Nina Simone song. Every Velvet Underground album. Every Ibsen play. Every Cindy Sherman photo. Every Greek myth. Every letter written from a Birmingham jail cell. Every note from Coltrane’s saxophone. It’s the indestructible beat of humankind. Calling from the past to let us know that we love to make ourselves heard, seen, felt and touched.

It’s what we do.

🙋 Got a question? Ask it! I might answer it. Or more likely, pretend to answer it while writing about what’s already swimming in my head.

📣 There are a few slots left in next week’s Presenting w/Confidence workshop. You should sign up.

🤖 Speaking of AI, here’s an excellent article about why all the tech leaders decided to be Nazis.

💸 If you’re enjoying the newsletter and can spare $2/month, I will take it!

🧺 Here’s a very stupid and sexy enamel pin you can buy.

🍉 Please donate to the Palestinian Children’s Relief Fund.

🏳️⚧️ …and to Trans Lifeline.

🚰 Say hello. I love hearing from you.